At the opening of the 2026 Consumer Electronics Show, NVIDIA founder and CEO Jensen Huang unveiled the “Rubin” platform, a significant architectural leap that marks the company’s first venture into “extreme codesign.”

Named in honor of the pioneering astronomer Vera Rubin, the platform is not merely a collection of faster chips but a holistic reimagining of the data center as a unified AI supercomputer. By integrating compute, networking, and software into a single coherent system, NVIDIA aims to ease the growing economic and physical bottlenecks of the generative AI era.

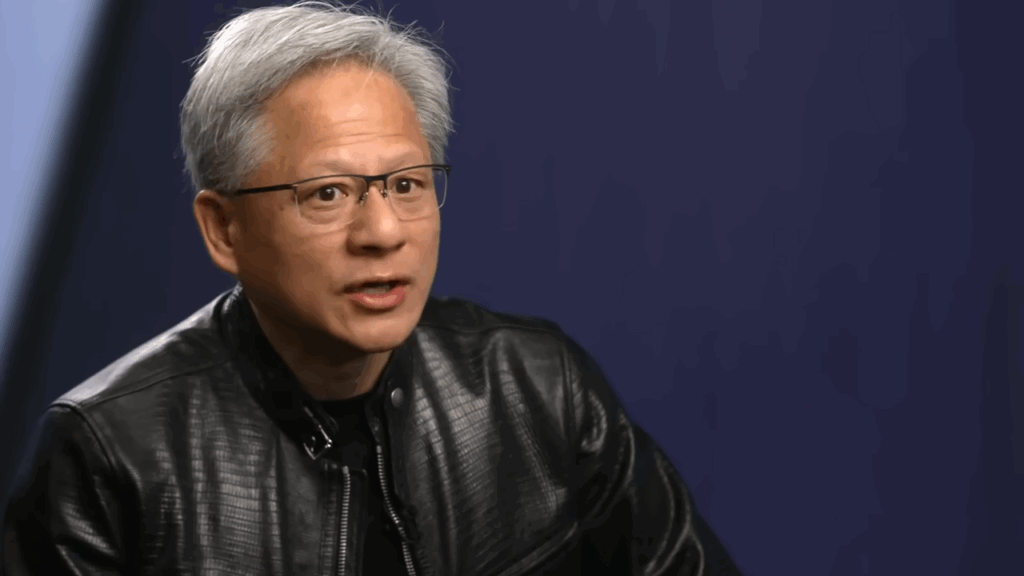

CEO Jensen Huang launches NVIDIA’s latest platform, Rubin

In an accompanying press release, NVIDIA formally announced the Rubin platform as the successor to its record-breaking Blackwell architecture. The launch arrives at a critical juncture for the tech industry. As large language models evolve into “agentic AI,” systems capable of multi-step reasoning and autonomous problem-solving, demand for inference compute has reached a fever pitch.

NVIDIA’s response is a six-chip ecosystem headlined by the new Rubin GPU and the custom Arm-based Vera CPU. The company’s performance claims are ambitious: up to a fivefold increase in AI inference performance and a 3.5-fold boost in training efficiency compared with Blackwell. Crucially, NVIDIA says Rubin achieves these gains while cutting the cost of generating AI tokens to roughly one-tenth that of previous systems.

At the heart of this leap is the “Vera Rubin NVL72” rack-scale system, which treats the entire rack, rather than the individual server, as the fundamental unit of compute. It combines 72 Rubin GPUs and 36 Vera CPUs linked by sixth-generation NVLink, which provides 260 terabytes per second of aggregate bandwidth across the rack.

The Vera CPU itself represents a major milestone for NVIDIA. It packs 88 custom “Olympus” cores that support spatial multithreading, giving it 176 hardware threads (rather than 176 physical cores) for complex data movement and reasoning tasks. The aim is to keep the high-speed GPUs supplied with data so they are not left idle.

NVIDIA’s Rubin is more efficient than its predecessors

Beyond raw speed, the Rubin platform targets the power and logistical challenges facing modern AI factories. The NVL72 is designed to be 100% liquid-cooled and uses a tubeless, cable-free architecture. This streamlined design is meant not only to lower the environmental footprint but also to dramatically simplify deployment.

During his keynote, Huang noted that installation times for these systems have been cut from several hours to just five minutes. That speed matters for cloud providers such as Microsoft Azure, Google Cloud, and AWS, all of which have committed to being among the first to offer Rubin-based instances in the second half of 2026.

The implications of the Rubin launch extend well beyond a single product cycle. By making large-scale AI significantly more economical, NVIDIA is paving the way for what Huang describes as the “AI Industrial Revolution.” The platform also includes advanced networking in the form of Spectrum-6 Ethernet and BlueField-4 data processing units, engineered to support the next wave of million-GPU environments.

This shift signifies that the “AI arms race” has moved beyond software into a phase of industrial-scale production of intelligence. As enterprises begin to deploy these systems, the focus will likely shift from simply building smarter models to scaling them to billions of users at a cost that makes sense for the global economy. With Rubin, NVIDIA has once again raised the bar, reinforcing its position as the primary architect of the world’s most advanced digital infrastructure.
