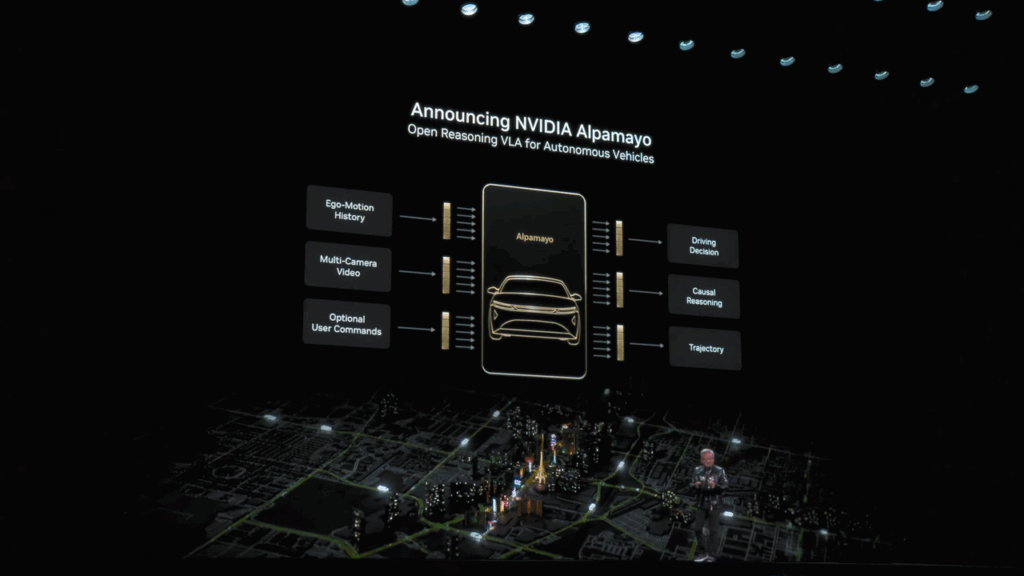

In a major strategic expansion unveiled at the 2026 Consumer Electronics Show, NVIDIA has introduced Alpamayo, a pioneering family of open-source AI models, simulation frameworks, and datasets designed to fundamentally change how autonomous vehicles perceive and navigate the world.

By integrating “chain-of-thought” reasoning into the driving process, NVIDIA is attempting to move self-driving technology beyond simple pattern recognition and toward a human-like ability to process complex, multi-step logic. CEO Jensen Huang characterized the launch as the “ChatGPT moment for physical AI,” suggesting a transition where machines no longer just observe their surroundings but actively reason through them.

NVIDIA CEO Jensen Huang announces the launch of Alpamayo

During his appearance at CES 2026 in Las Vegas, NVIDIA CEO Jensen Huang made a surprise announcement: the company’s latest AI model for vehicles. According to a TechCrunch report, the release centers on Alpamayo 1, a 10-billion-parameter vision-language-action (VLA) model that NVIDIA presents as the industry’s first open reasoning architecture for autonomous vehicles. Unlike traditional “black box” systems that output steering and braking commands without explanation, Alpamayo generates the reasoning behind each trajectory it proposes.

In other words, if a vehicle encounters an unpredictable “long-tail” scenario, the system evaluates the environmental variables step-by-step. It identifies risks, anticipates likely actions of other drivers, and selects a safe path, all while providing a transparent data record of its process.

NVIDIA is positioning Alpamayo as a “teacher model” rather than a direct runtime system for every car. By releasing the model weights on Hugging Face, the company is inviting the research community and automotive partners to optimize this AI model for specific vehicle hardware.

This open-source strategy aims to accelerate the development of Level 4 autonomy by providing a standardized foundation for safety validation and regulatory auditing. Proponents of the move suggest that the ability to “ask” a vehicle why it made a specific choice is a critical requirement for building public trust and satisfying future legislative standards.

NVIDIA’s Alpamayo is supported by two key pillars

The Alpamayo ecosystem rests on two pillars designed to streamline the training and testing of these systems. The first is AlpaSim, an open-source, end-to-end simulation framework available on GitHub. It allows developers to test AI policies in closed-loop environments that realistically model sensor inputs and traffic dynamics.

The second pillar is a massive repository of Physical AI Open Datasets. It comprises over 1,700 hours of driving data from 25 countries. This repository encompasses rare edge cases and complex real-world conditions that are essential for training models to handle similar situations in the future.

By providing these foundational tools for free, NVIDIA is effectively setting the technical parameters for the next era of transportation. The initiative has already garnered significant attention for its focus on “neuro-symbolic AI,” which combines the perception of neural networks with the rule-based logic of symbolic reasoning.

As the industry enters 2026, the success of the Alpamayo family could determine whether the road’s future belongs to the most powerful algorithms or the most explainable ones. This move toward transparency may force a major shift across the industry, potentially making explainability a mandatory component of autonomous vehicle safety.
