In a significant move to bolster its safety infrastructure, OpenAI has officially launched a search for a new Head of Preparedness, an executive role designed to serve as a critical gatekeeper against the emerging risks of advanced artificial intelligence.

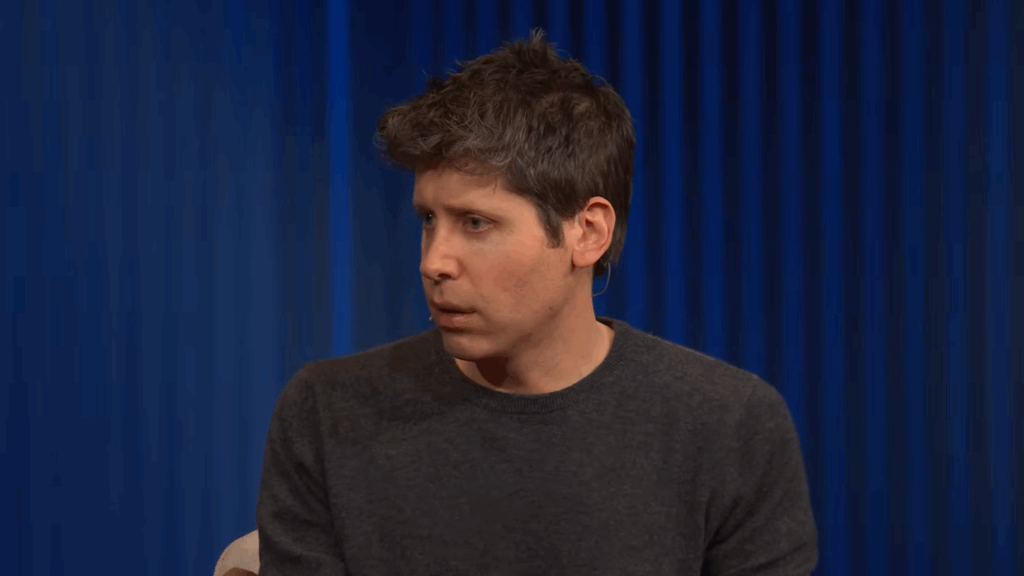

As first reported by Business Insider and further detailed in a recent announcement by CEO Sam Altman, the position carries a base salary of $555,000 plus equity, a figure that reflects the immense responsibility and high-pressure nature of the task.

The role occupies a distinctive place not only within OpenAI’s corporate structure but also across the broader AI industry. The search appears to be driven by the urgency of developing frontier AI models while staying ahead of rival AI companies.

OpenAI CEO Sam Altman Opens the Search for a “Head of Preparedness”

Sam Altman is seeking a new Head of Preparedness at OpenAI as the company pushes ahead with frontier AI models and the data centers that power them. The recruitment effort comes at a pivotal moment, as AI models shift from purely informational tools to systems with increasingly autonomous capabilities.

According to the report, the Head of Preparedness will lead the technical execution of OpenAI’s “Preparedness Framework,” a set of internal protocols updated in 2025. The framework monitors “frontier risks” across four primary domains: cybersecurity; chemical, biological, radiological, and nuclear (CBRN) threats; autonomous replication; and AI self-improvement.

Sam Altman characterized the vacancy as a “critical role at an important time,” acknowledging that while models are delivering unprecedented benefits, they are also beginning to present tangible challenges. Specifically, Altman noted that newer models have become proficient enough in computer security to discover critical vulnerabilities, and that the company has already seen a “preview” of their potential impact on users’ mental health. The new executive will be tasked with identifying when models cross such risk thresholds and, if necessary, delaying or restricting product launches that pose a risk of severe harm.


Does OpenAI really need a Head of Preparedness?

According to Business Insider, this search follows a period of notable leadership turnover within OpenAI’s safety divisions. The position has remained largely unfilled since the summer of 2024, when former safety leaders were reassigned or left the firm. By filling the role now, OpenAI is signaling a return to a “safety-first” architecture, aiming to ensure that as its models grow more powerful, its human-led oversight mechanisms become equally robust.

Ultimately, the search for a Head of Preparedness is an exercise in professional accountability. It reflects a mature recognition that the next frontier of AI (inference and autonomous agency) requires a deeper, more nuanced understanding of how the technology can be abused. By investing in top-tier talent to “stare into the abyss” of potential risks, OpenAI aims to foster an environment where innovation and safety are not competing interests but two halves of a single, responsible mission to benefit humanity.
